What interactive sensors are and how we apply them to create audiovisual experiences in which users interact with content through movement, touch, or proximity.

From the very beginning, interaction has been an essential part of our evolution. We use our hands to explore, discover, and manipulate our surroundings. Today, this need for interaction moves into the digital world through interactive sensors, devices that enable spaces to respond to movement, light, or sound, creating immersive experiences that previously seemed impossible.

What Are Interactive Sensors?

Interactive sensors are devices designed to capture environmental data and respond in real time. Thanks to these sensors, users can influence digital content through movement, proximity, voice, light, or touch.

Depth sensors in particular combine RGB cameras, infrared projectors, and infrared detectors, which allows depth mapping through structured light or Time-of-Flight calculations. This technology enables real-time gesture recognition and skeletal tracking, paving the way for more natural and immersive interactive experiences.
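To make the Time-of-Flight idea concrete, here is a minimal sketch of the basic relationship between a light pulse's round-trip time and distance. The function name `tof_distance_m` is hypothetical and chosen for illustration. Many commercial sensors measure the phase shift of modulated light rather than timing a pulse directly, so this shows only the underlying principle, not any specific device's method.

```python
# Speed of light in a vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a surface from the round-trip time of a light pulse.

    Hypothetical helper for illustration; not part of any sensor SDK.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the surface and back, so halve the path length.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


if __name__ == "__main__":
    # A 20 ns round trip corresponds to roughly 3 m.
    print(f"{tof_distance_m(20e-9):.3f} m")
```

The tiny time scales involved (a few nanoseconds per metre) are one reason depth sensing relies on dedicated hardware rather than general-purpose timing.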

Among the most widely used sensors in the industry are:

- Touch sensors (touch surfaces, interactive screens)

- Movement and depth sensors (Kinect, Intel RealSense, Lidar)

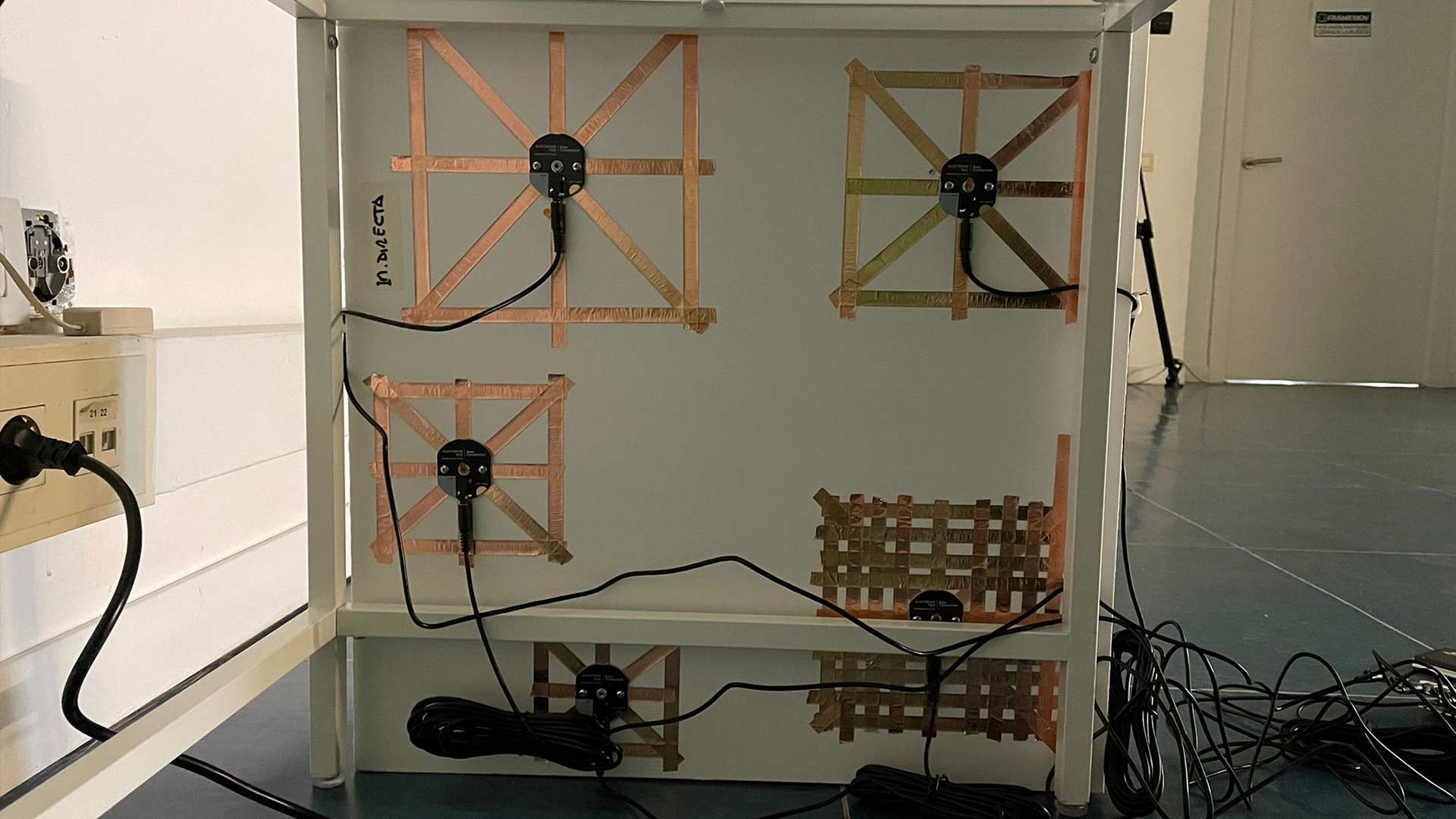

- Proximity sensors (the hardware used in ReHabilitem)

- Sound and light sensors (voice detection, reactive lighting)

Interactivity as Part of the Experience

Interactive sensors have opened new possibilities in the audiovisual world. It’s no longer just about observing but about becoming part of the artwork and influencing what happens within the digital space. In this context, interactive sensors become a key tool for designing experiences that blur the boundary between physical and virtual spaces.

At Framemov, we explore the potential of these systems to create environments where users don’t just receive information but generate it. The ability to move, touch, or provoke real-time reactions strengthens the connection between technology and the viewer’s emotions, resulting in memorable immersive experiences.

Applications of Interactive Sensors in Our Projects

Over the years, we have integrated interactive sensors into various digital experiences. Some of our most notable projects include:

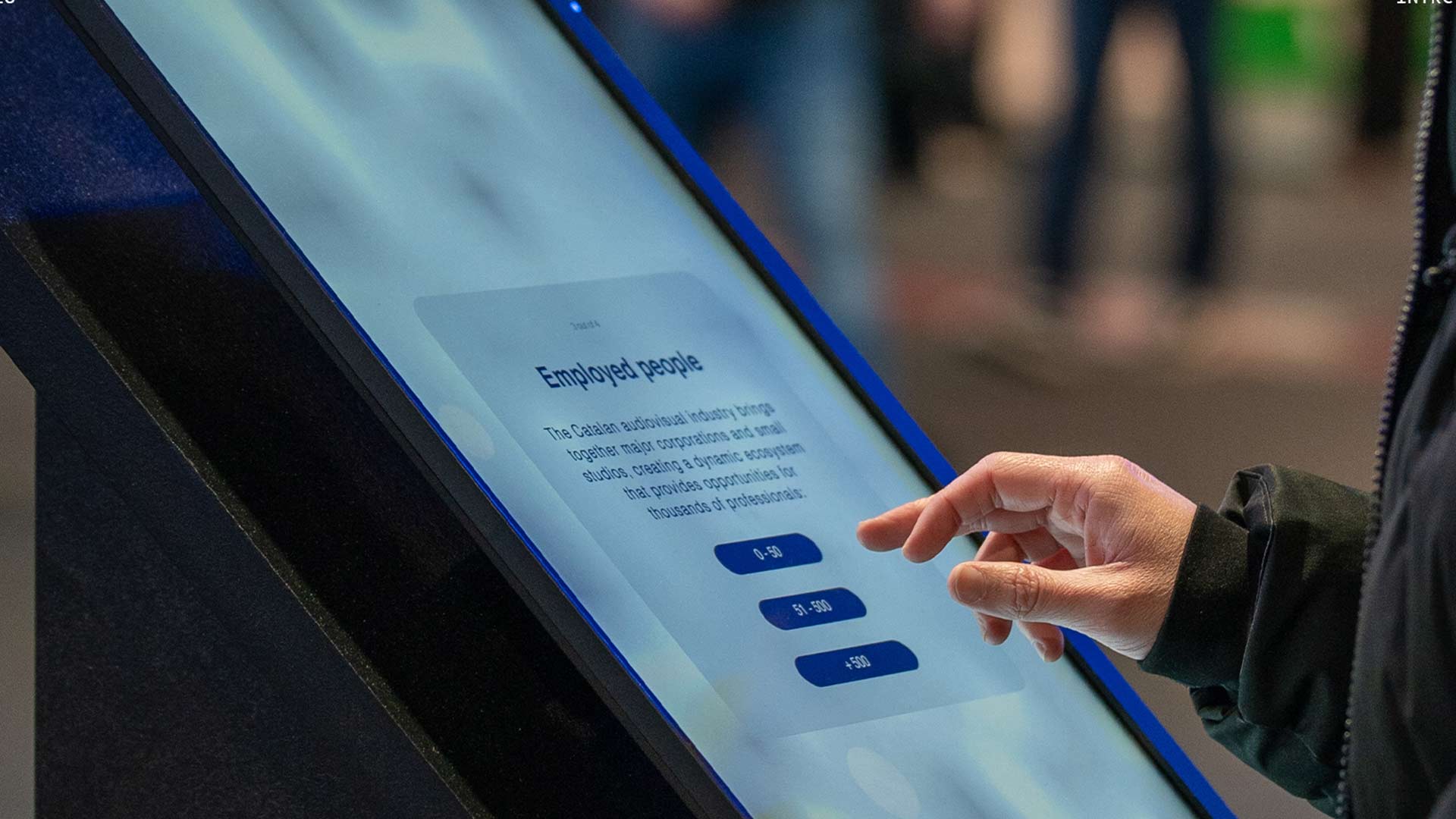

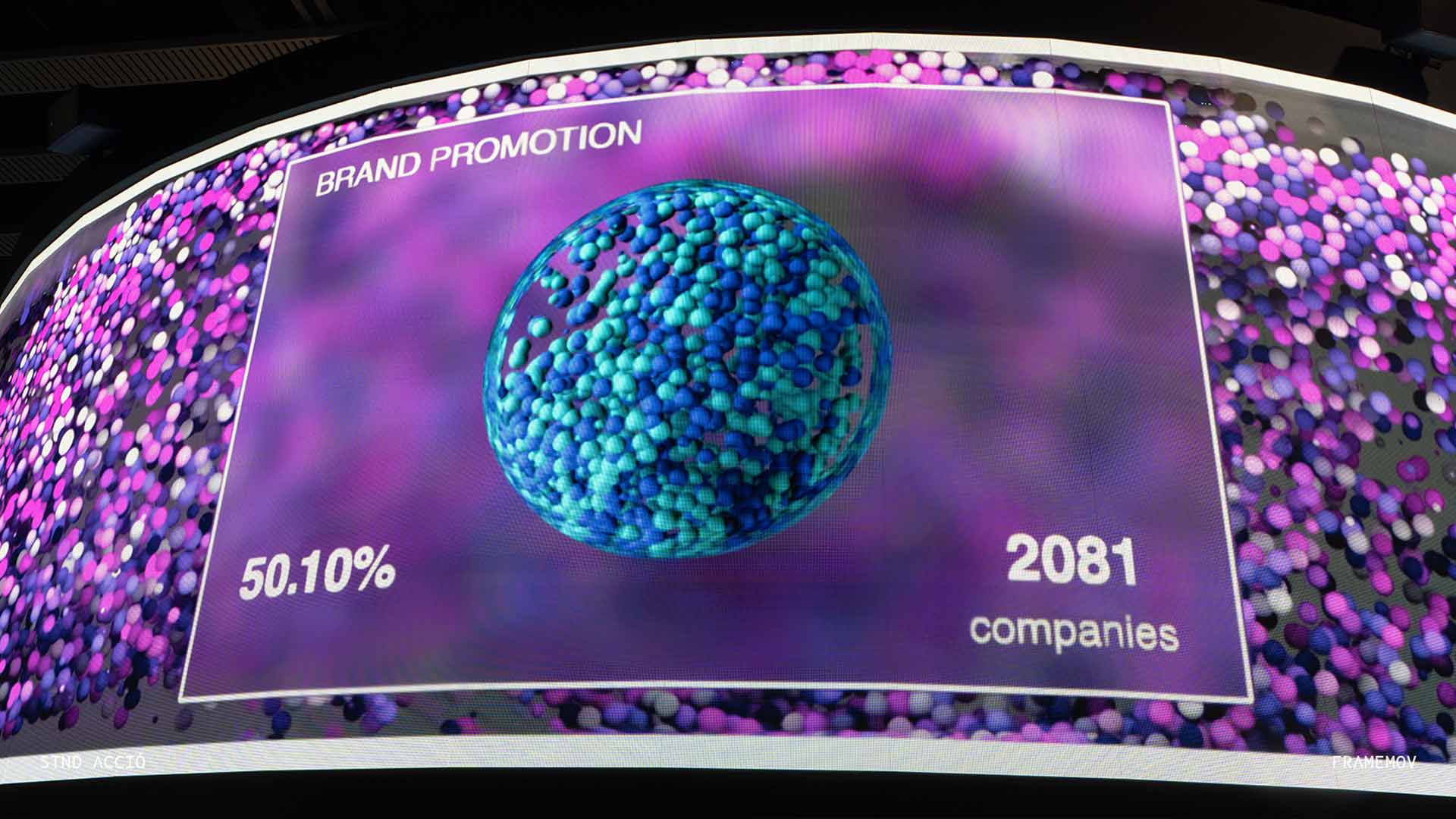

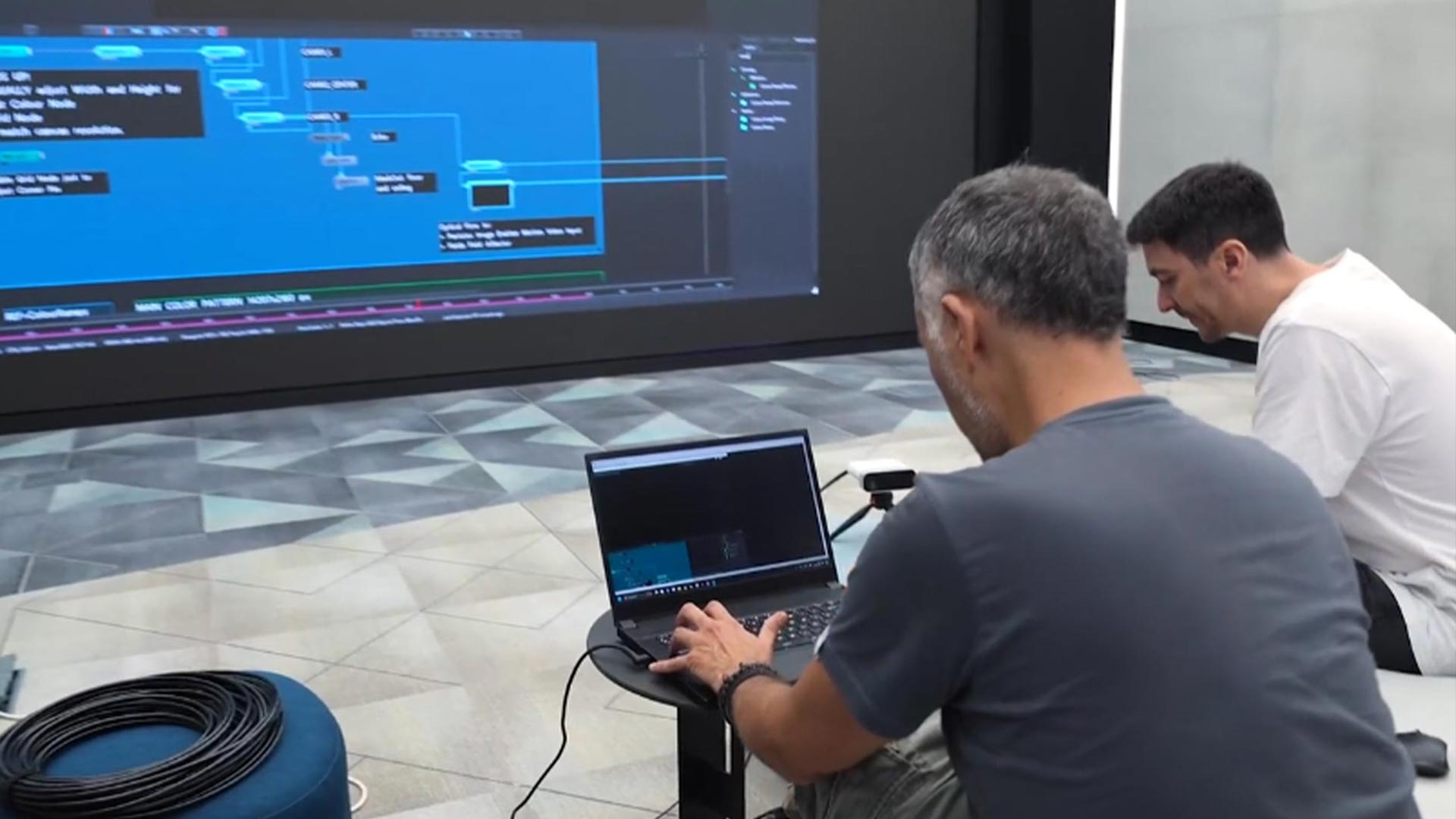

1. Stand Acció – ISE 2025

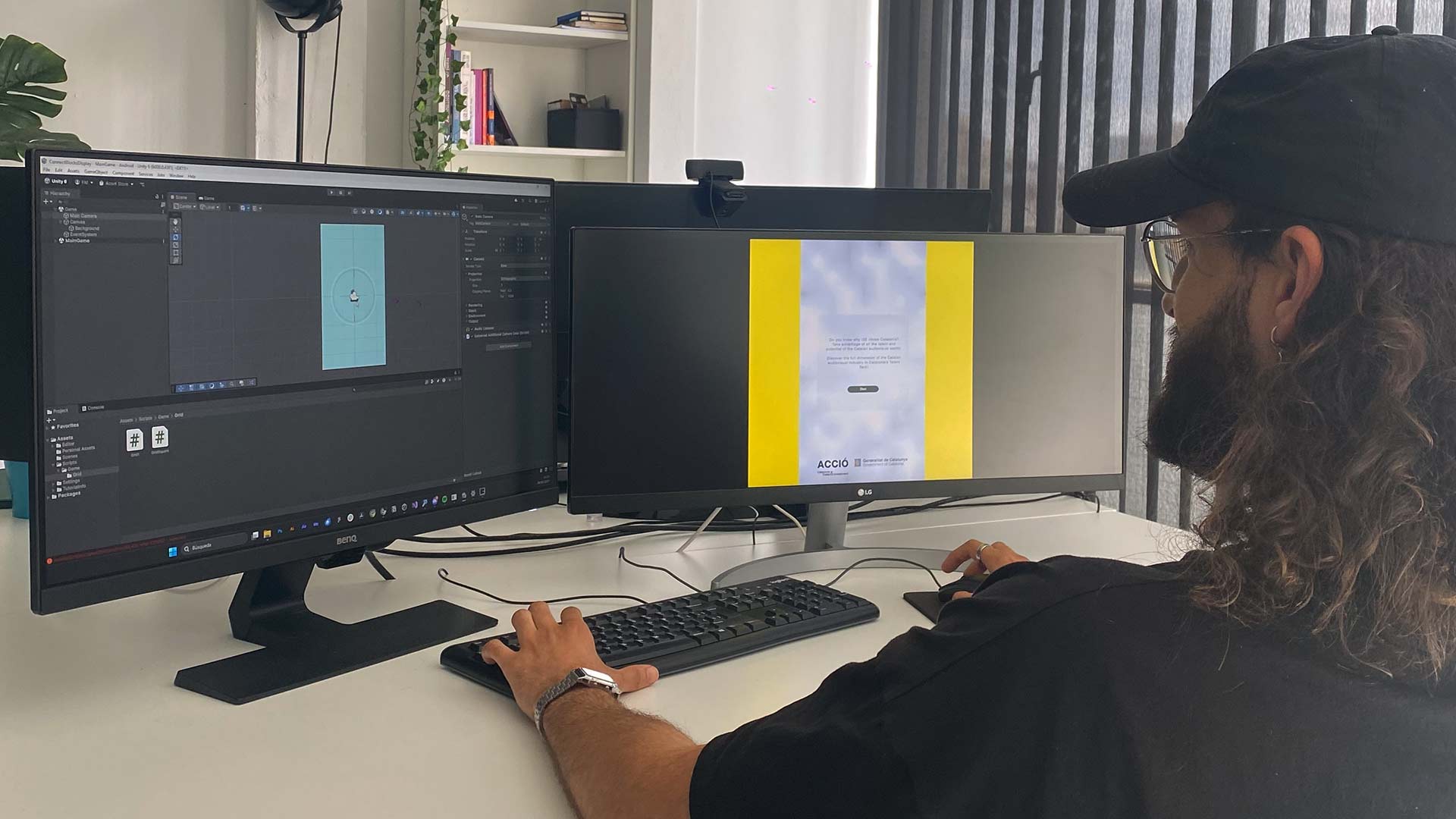

This installation, which we created for ISE 2025, transformed data from the Catalan audiovisual ecosystem into real-time generative visuals. The interaction point was a touch totem where visitors answered questions about the sector. Their answers triggered an algorithm developed in Unity that modified, in real time, the visuals shown on a curved LED screen.

The visualizations were fed by a database with information on 4,161 companies in the sector, each represented as a particle on the screen. Every interaction modified the visual composition instantly using Notch VFX, turning the information into an immersive and dynamic experience.

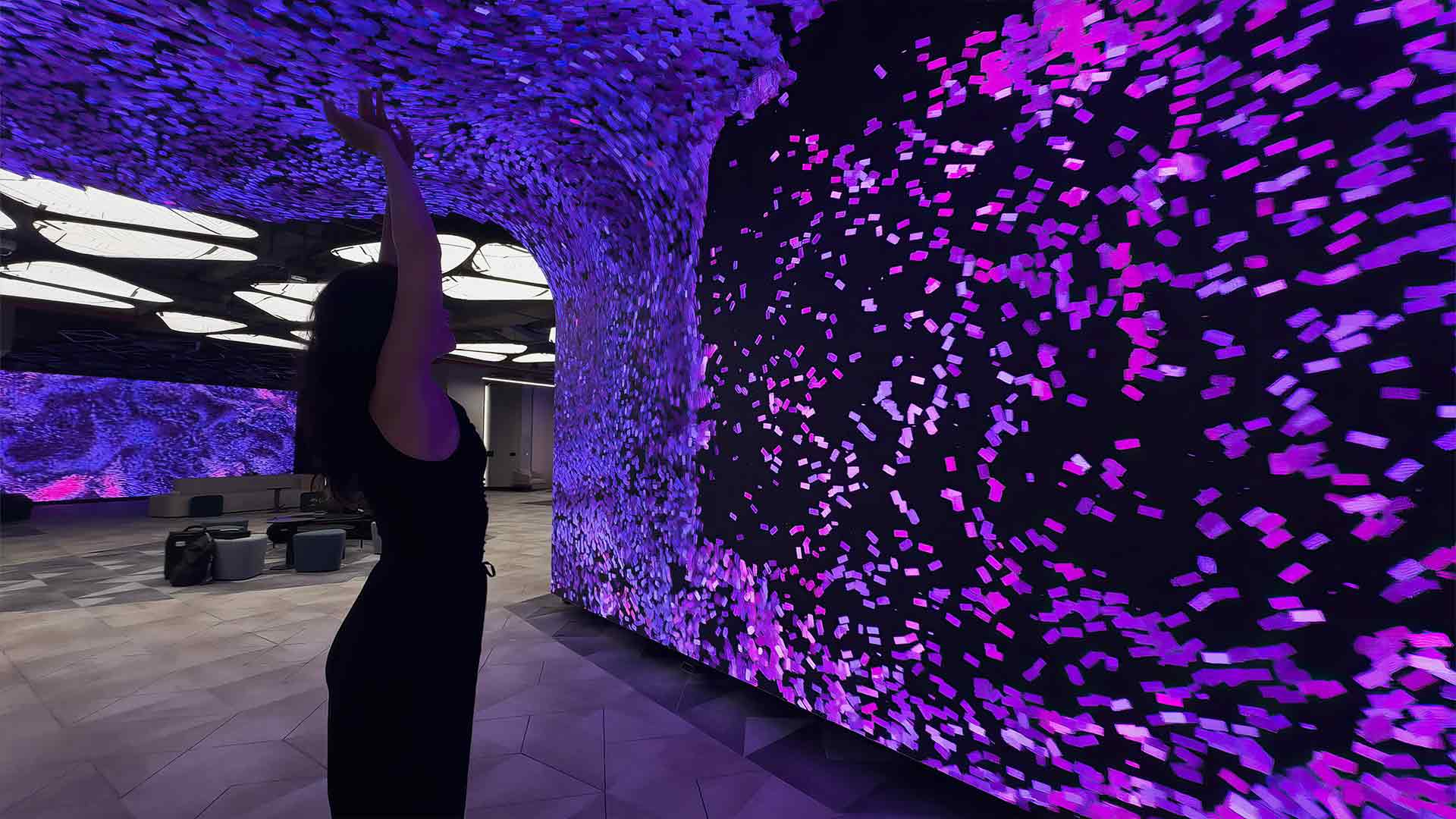

2. Tonomus Neom – Interactive Installation in Saudi Arabia

This project allowed us to develop an enveloping space where interactive sensor technology transforms how visitors perceive their environment. We created an immersive corridor for offices in NEOM with curved LED screens that responded to visitors’ movements. Using Kinect cameras, we captured silhouettes and gestures and generated particle graphics in Notch VFX that evolved in real time. Some screens displayed dynamic images reacting to movement, while others remained static to balance the visual atmosphere.
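As a rough illustration of how a depth camera frame can become a silhouette that drives particles, the sketch below thresholds a depth image into a mask and lists the pixels where particles could be emitted. This is not the Kinect SDK or the Notch VFX pipeline used in the project; the frame format (millimetres per pixel, 0 meaning no reading), the distance thresholds, and the names `silhouette_mask` and `emitter_points` are all assumptions made for the example.

```python
from typing import List, Tuple

# Assumed format: depth per pixel in millimetres, 0 meaning "no reading".
DepthFrame = List[List[int]]
Mask = List[List[bool]]


def silhouette_mask(frame: DepthFrame, near_mm: int, far_mm: int) -> Mask:
    """Mark pixels whose depth falls inside the interaction zone."""
    return [[near_mm <= depth <= far_mm for depth in row] for row in frame]


def emitter_points(mask: Mask, step: int = 1) -> List[Tuple[int, int]]:
    """Return (x, y) pixel coordinates inside the silhouette.

    `step` subsamples the mask so fewer particles are spawned per frame.
    """
    return [
        (x, y)
        for y in range(0, len(mask), step)
        for x in range(0, len(mask[y]), step)
        if mask[y][x]
    ]


if __name__ == "__main__":
    # Tiny 3x2 example frame: one visitor standing about 1.2 to 1.8 m away.
    frame = [
        [0, 1500, 4000],
        [1200, 1800, 0],
    ]
    mask = silhouette_mask(frame, near_mm=500, far_mm=2500)
    print(emitter_points(mask))
```

Thresholding by distance is a simple way to separate a visitor from the background without any colour information; a production system would typically also smooth the mask and track it across frames.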

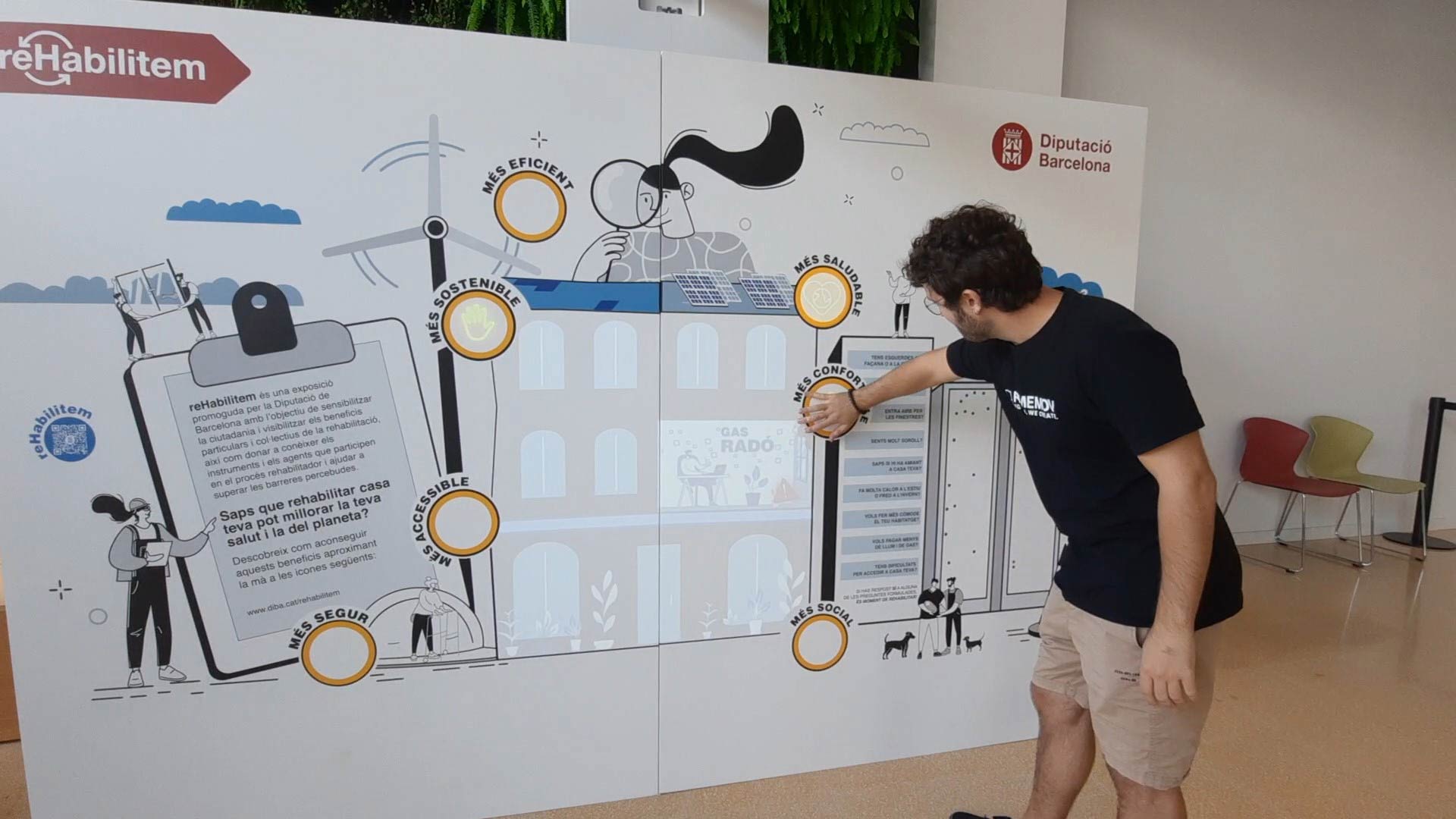

3. ReHabilitem – Interactive Exhibition

ReHabilitem is an exhibition designed to bring the public closer to the world of space rehabilitation in a clear, accessible, and visual way. Throughout the tour, interactive sensors are the key tool for activating content and guiding the visitor.

Panel 1: Activating contents through touch

In this first panel, visitors could discover the main concepts of rehabilitation by activating audiovisual projections through touch sensors. In addition, a small hands-on activity invited them to answer questions, creating direct interaction and guiding them to the next space.

Panel 2: Personalized information at a single touch

In the second panel, sensors activated personalized messages about the different agents involved in a rehabilitation process. The system was simple and intuitive, allowing each visitor to explore the content at their own pace and according to their interests.

The Technology Behind Our Interactive Experiences

To make these experiences possible, we combine interactive sensors with advanced software that enables real-time data processing. Some key tools we use at Framemov include:

- Notch VFX and TouchDesigner (real-time graphics creation)

- Unity and Unreal Engine (interactive 3D environment development)

- Kinect and Lidar sensors (accurate motion and depth detection)

The Future of Digital Interactivity

The evolution of mixed reality, artificial intelligence, and immersive technologies continues to expand the possibilities of interactive sensors. At Framemov, we keep exploring new ways to integrate these tools into innovative projects, ranging from corporate events and immersive experiences to installations in museums and retail spaces.

The viewer is no longer just an observer but an active part of the artwork. With each gesture, movement, and interaction, the experience redefines itself in real time.

If you want to discover how interactive sensors can transform your next project, contact us at Framemov and let’s innovate together.

Erik Fernandez – Community Manager